This summer, Phipson and I worked on a "design for everyone" project: we built a system called FAVECAD (Fabrication in Augmented and Virtual Environments for Computer-aided Design) that helps everyone, including non-designers, design.

The system combines the advantages of AR and VR to make designing easier and more comfortable for non-designers. In our example case, users design parameterized furniture in a real or virtual room.

Our system is easy to learn, so users don't need to be CAD experts or engineers to design. They can use AR to scan the room and VR to get an immersive sense of their design, and intuitive gestures let them modify furniture parameters easily. The output file is manufacturable, so users can actually see a physical model of the product they designed, as shown in the picture below.

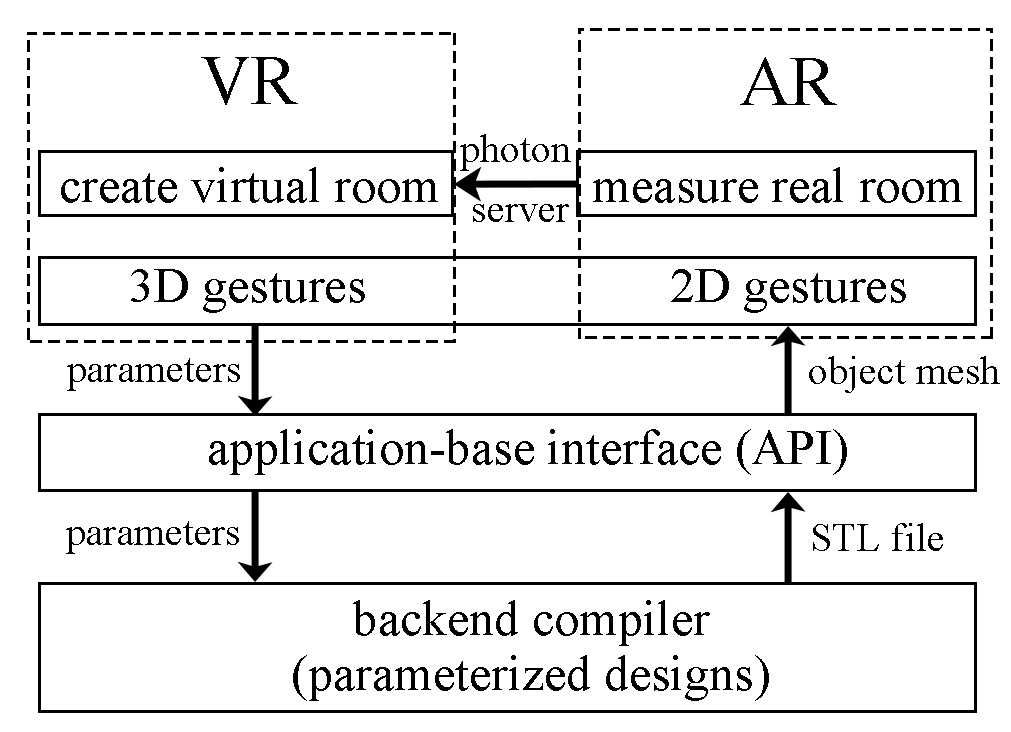

The system is made up of a backend compiler, an API, and AR/VR software and equipment. The relationship between these components is shown below:

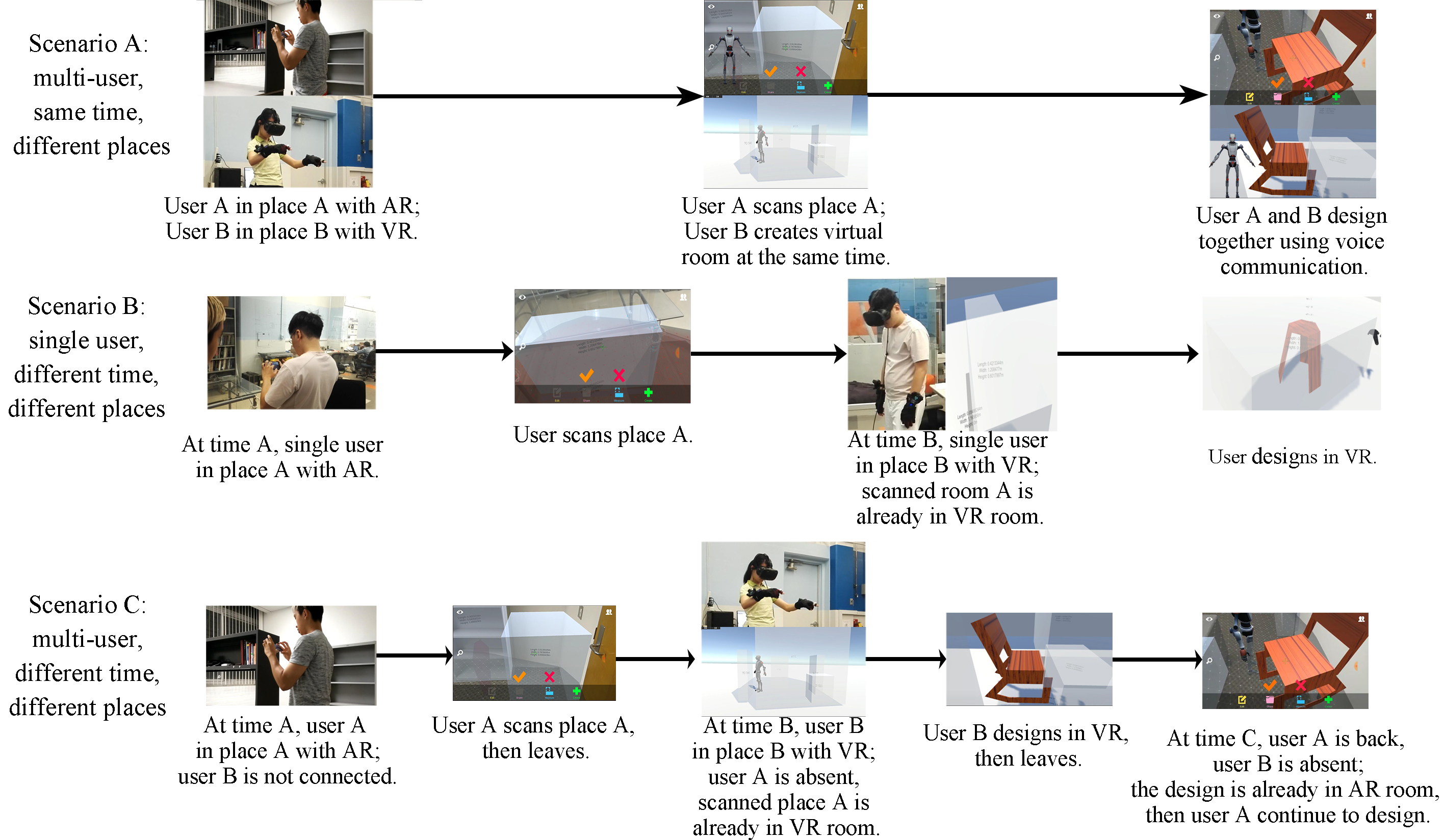

We created three application scenarios for the system:

- multi-user, same time, different places

- single user, different time, different places

- multi-user, different time, different places

The following picture explains these three scenarios in more detail:

We invited ten people to test and experience our system, and the feedback was mostly positive. In addition, the furniture we manufactured from the output files fit well into the dog-house model of the real room, which also suggests that the system works as intended.

Finally, we wrote a paper and recorded a 6-minute video about the system for CHI 2019.

Timeline of the previous weeks

- Sep 10-21: conduct the user study; work mainly on the paper and video.

- Aug 30: start final debugging and paper writing; plan the user study.

- Aug 25-29: test and debug glove gestures.

- Aug 24: successfully connect AR and VR using a Photon server.

- Aug 17-23: research 3D reconstruction in VR, the Photon server, and gesture detection.

- Aug 16-17: set up the new graphics card; debug the API and the UI in VR.

- Aug 10-15: finish the API and debug the UI using the controller pointer.

- Aug 8-9: research how to install a local server on the lab computer, and research the API.

- Aug 6-8: set up the HTC Vive and struggle with the current graphics card.

- Aug 4-5: learn about the API.

- Aug 3: arrive on campus, set up MR, and install software such as Unity and SteamVR.