At first, I started from the okvis project to build my own BOEM SLAM. After spending several weeks understanding what is going on in that well-established project, I realized that there was no practical way for me to develop and test within it. What I needed was a smaller-scale project that I could experiment with, while using okvis as a reference.

To scale things down, I decided to

- separate the frontend (image processing) from the backend (fusing proprioceptive and exteroceptive information),

- focus only on a chunk of data.

This week, I built a prototype of the frontend that processes 19 images. The frontend outputs a CSV-style file containing the observation data, so the backend does not have to process images directly. This also lets us develop both modules in parallel and test BOEM SLAM as soon as possible.
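To illustrate the decoupling, here is a minimal sketch of the backend side, which only parses observation rows and never touches pixels. The layout is an assumption modelled on the BAL format from the Ceres bundle adjustment tutorial: a header line `<num_cameras> <num_landmarks> <num_observations>` followed by one `<camera> <landmark> <x> <y>` row per observation. `Observation` and `parse_observations` are hypothetical names, not part of any existing code.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Observation:
    camera_index: int    # which image the feature was observed in
    landmark_index: int  # which 3D landmark the feature belongs to
    x: float             # feature position in the image (pixels)
    y: float


def parse_observations(text: str) -> Tuple[int, int, List[Observation]]:
    """Parse an assumed BAL-style observation block (header + observation rows)."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    num_cameras, num_landmarks, num_obs = map(int, lines[0].split())
    observations = []
    for ln in lines[1:1 + num_obs]:
        cam, lm, x, y = ln.split()
        observations.append(Observation(int(cam), int(lm), float(x), float(y)))
    if len(observations) != num_obs:
        raise ValueError(f"expected {num_obs} observations, found {len(observations)}")
    return num_cameras, num_landmarks, observations
```

With this split, the backend can be tested against a hand-written observation file long before the frontend is finished.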

Feature extraction

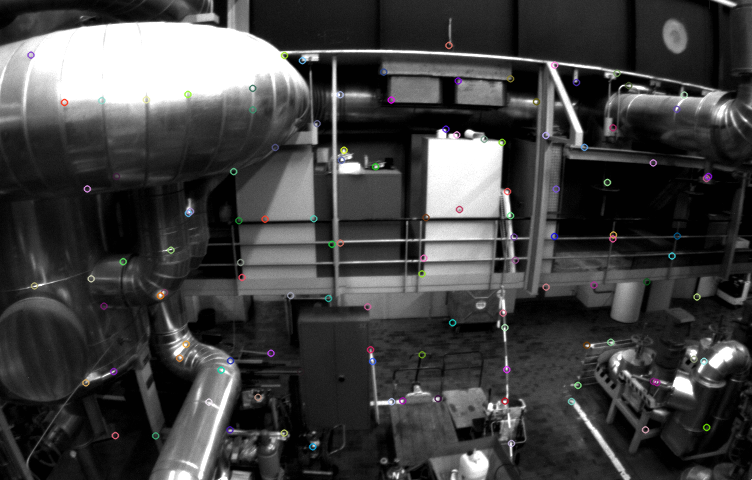

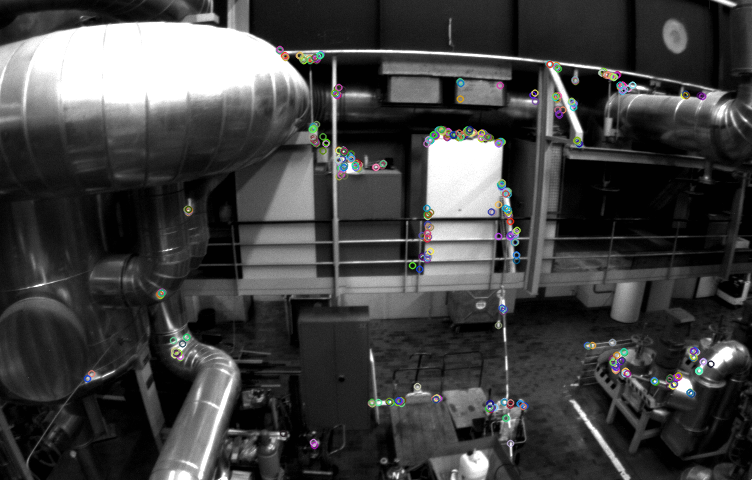

The first step in a SLAM frontend is feature extraction, and there are many options. I only tested BRISK and ORB, since both are used in modern SLAM systems. The images above show the same frame with the features extracted by each method.

I chose BRISK, since its features appear more spread out across the image.
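To make "more spread out" a bit more concrete, one simple check is the fraction of grid cells that contain at least one keypoint. This is an illustrative sketch, not the comparison used here; `grid_coverage` and the default 8 x 6 grid are hypothetical choices.

```python
def grid_coverage(keypoints, width, height, cols=8, rows=6):
    """Fraction of grid cells that contain at least one keypoint.

    keypoints: iterable of (x, y) pixel coordinates.
    A higher value means the features cover more of the image.
    """
    occupied = set()
    for x, y in keypoints:
        if 0 <= x < width and 0 <= y < height:
            # Map the pixel position to the grid cell it falls in.
            col = int(x * cols / width)
            row = int(y * rows / height)
            occupied.add((row, col))
    return len(occupied) / (cols * rows)


clustered = [(10, 10), (12, 11), (15, 14)]            # all in one corner
spread = [(10, 10), (630, 10), (10, 470), (630, 470)]  # one per corner
print(grid_coverage(clustered, 640, 480), grid_coverage(spread, 640, 480))
```

With OpenCV, the keypoints returned by `cv2.BRISK_create().detect(image, None)` or `cv2.ORB_create().detect(image, None)` expose their `(x, y)` position through `.pt`, so `[kp.pt for kp in keypoints]` can be passed in directly. Since the two detectors can return very different numbers of keypoints, the comparison is fairer at a similar keypoint count.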

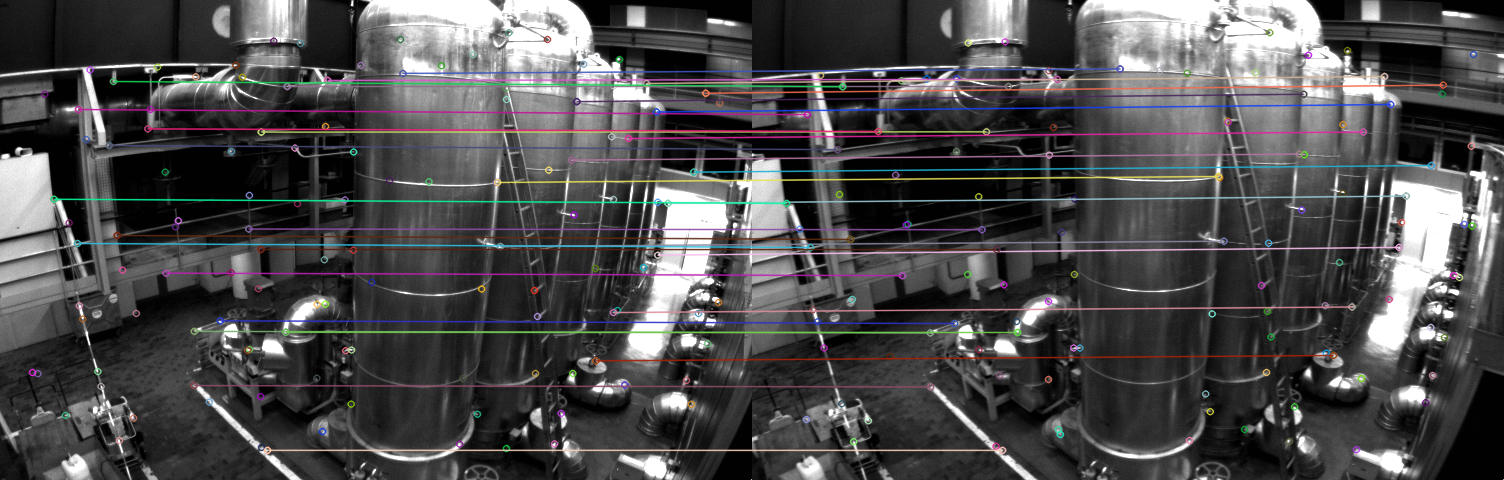

Feature matching

Before accepting matches, I discarded feature pairs with a large descriptor distance. The matching itself is a simple brute-force search between consecutive images. I visually checked that there are no mismatches.
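Since BRISK descriptors are binary strings, brute-force matching amounts to comparing every descriptor pair by Hamming distance. The sketch below shows that idea with a distance threshold; the threshold value of 60 and the mutual-nearest-neighbour (cross-check) filter are assumptions added for illustration, and `hamming` and `brute_force_match` are hypothetical names.

```python
def hamming(a: bytes, b: bytes) -> int:
    """Number of differing bits between two binary descriptors."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def brute_force_match(desc_prev, desc_curr, max_distance=60, cross_check=True):
    """Match binary descriptors between two consecutive images.

    Returns a list of (index_in_prev, index_in_curr, distance) tuples.
    """
    if not desc_prev or not desc_curr:
        return []

    def nearest(query, train):
        # For each query descriptor: (index, distance) of its closest train descriptor.
        result = []
        for q in query:
            dists = [hamming(q, t) for t in train]
            j = min(range(len(dists)), key=dists.__getitem__)
            result.append((j, dists[j]))
        return result

    forward = nearest(desc_prev, desc_curr)
    backward = nearest(desc_curr, desc_prev) if cross_check else None
    matches = []
    for i, (j, d) in enumerate(forward):
        if d > max_distance:
            continue  # descriptors too different: discard the pair
        if cross_check and backward[j][0] != i:
            continue  # keep only mutual nearest neighbours
        matches.append((i, j, d))
    return matches
```

OpenCV offers the same search as `cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)`, whose `match()` results carry `queryIdx`, `trainIdx` and `distance`; the threshold on `distance` would still be applied afterwards.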

Observation data output

I then wrote the matched features to a file that the backend can process later. A quick note on nomenclature: a landmark is a 3D point, while a feature is the observation of a landmark in a 2D image. The output file follows the convention used in the Ceres Solver bundle adjustment tutorial.
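Going from pairwise matches to landmark observations requires chaining matches across consecutive images, so that one landmark index is shared by all of its features. Here is a minimal sketch, assuming the layout of the Ceres tutorial's BAL problem files: a header `<num_cameras> <num_points> <num_observations>` followed by `<camera_index> <point_index> <x> <y>` rows. `build_tracks` and `format_observations` are hypothetical names. A full BAL file also contains camera-parameter and 3D-point blocks, which the frontend cannot provide, so only the header and observations are produced.

```python
def build_tracks(matches_per_pair):
    """Assign a landmark index to features by chaining consecutive matches.

    matches_per_pair[k] holds (i, j) pairs linking feature i in image k
    to feature j in image k + 1.
    Returns {(image_index, feature_index): landmark_index}.
    """
    landmark_of = {}
    next_id = 0
    for k, matches in enumerate(matches_per_pair):
        for i, j in matches:
            if (k, i) not in landmark_of:
                # First time this feature is matched: start a new landmark.
                landmark_of[(k, i)] = next_id
                next_id += 1
            # The matched feature in the next image sees the same landmark.
            landmark_of[(k + 1, j)] = landmark_of[(k, i)]
    return landmark_of


def format_observations(landmark_of, keypoints_per_image):
    """Return a BAL-style header plus one observation row per feature."""
    observations = sorted(
        (cam, lm, *keypoints_per_image[cam][feat])
        for (cam, feat), lm in landmark_of.items()
    )
    num_cameras = len(keypoints_per_image)
    num_points = len(set(landmark_of.values()))
    lines = [f"{num_cameras} {num_points} {len(observations)}"]
    lines += [f"{cam} {lm} {x:.6f} {y:.6f}" for cam, lm, x, y in observations]
    return "\n".join(lines) + "\n"
```

Features that were never matched get no landmark and are not written, since a single observation does not constrain a 3D point. The sketch writes raw pixel coordinates; the BAL convention places the origin at the image center with the y-axis pointing up, so a conversion may be needed if the backend reuses the tutorial's BAL reader.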