Background

- Overall question: how to use 3D gestures to change 3D design parameters

- This breaks down into two smaller questions:

- How to map a 3D gesture to 3D object geometry

- How to determine 3D design parameters from object geometry

Challenge

- Ambiguity in gesture

- Different designs have different parameters

- Different users have different understandings of the same gesture

Related works

(How do other works manipulate 3D object geometry)

- FabByExample (2014)

- 2D interface (keyboard-mouse)

- select a leaf node in the hierarchical tree structure and drag to change it

- => not applicable to our system (no leaf node, not 2D interface)

- Voodoo Dolls (1999), MixFab (2014), etc.

- Explicitly define which gesture changes which parameter

- => not extensible (parameters differ from object to object)

- Posing and acting as input for personalizing furniture (2016)

- voice command, combined with hand posture with regard to body scale

- => voice commands not considered for now (complicated to implement; voice recognition and ambiguity in voice commands can introduce further challenges)

- Machine learning

- => not considered for now (complicated to implement; need to check whether an existing dataset is available)

Method

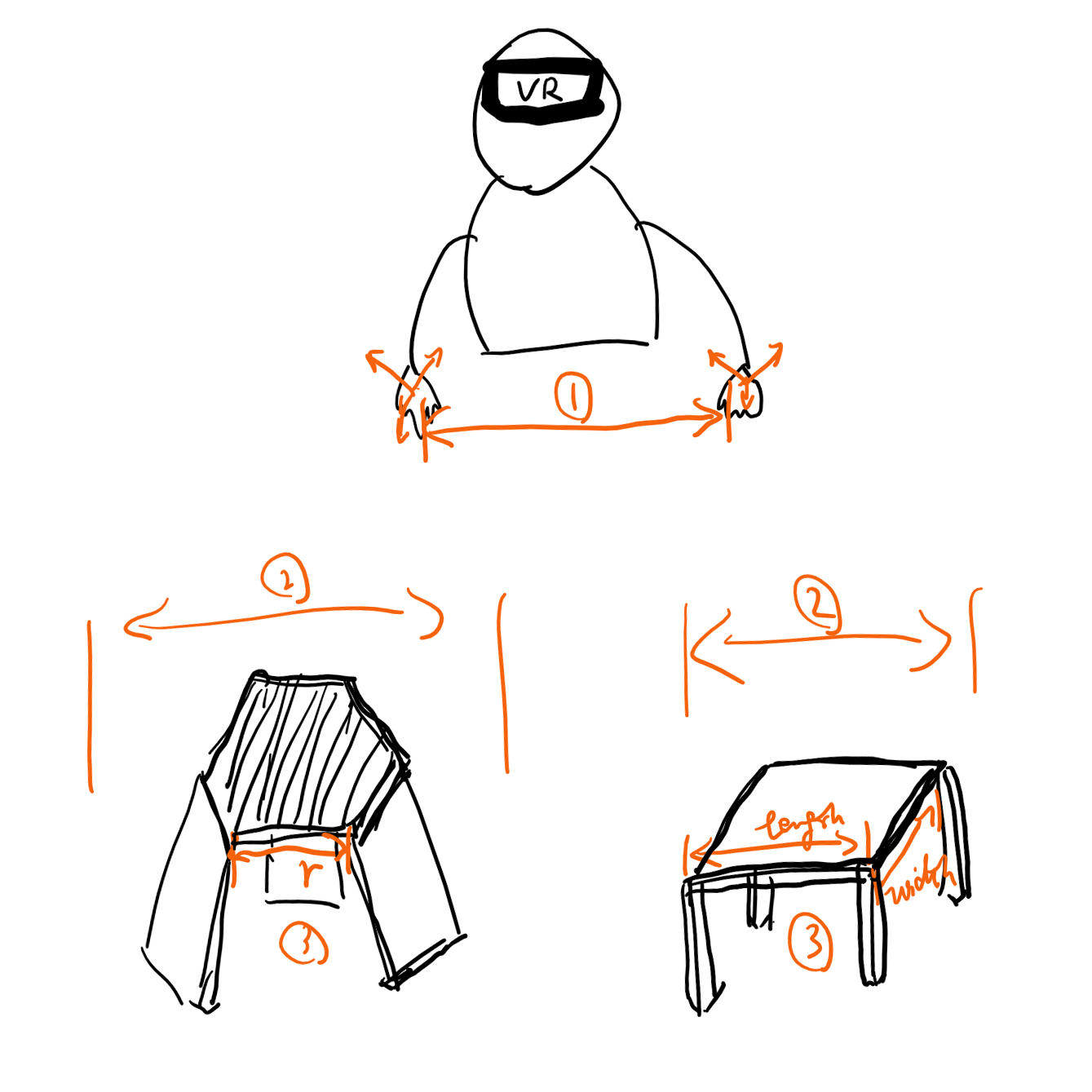

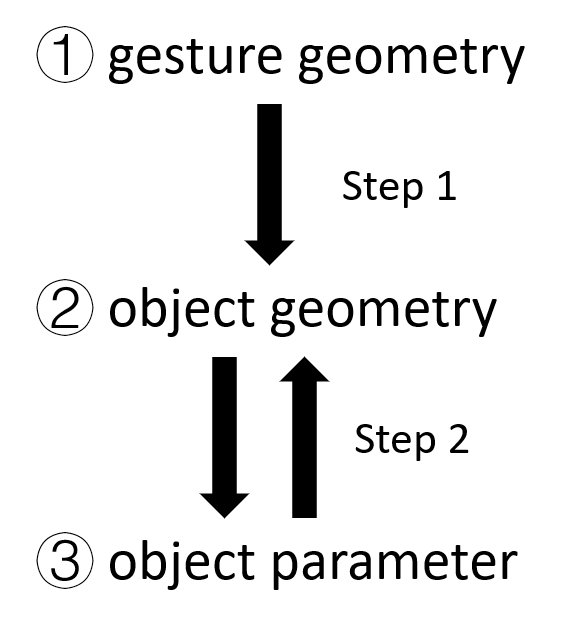

2-step pipeline:

- Step 1: map gesture geometry to object geometry

- Step 2: map object geometry to object parameter

Why it helps to use object geometry as an intermediate between gesture geometry and object parameter:

- the abstract geometry of any object can be classified into: plane, line, point

- so the set of geometry classes stays small and fixed, while object parameters differ from object to object

- when the information from both gesture and object is expressed as geometry (plane, line, point), the two share attributes that can help the mapping in step 1.

- these shared attributes are:

- for plane: 3D position, normal direction

- for line: 3D position, direction

- for point: 3D position
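The three geometry classes and their shared attributes could be written down as plain data types. This is only a minimal sketch: the class names, the field names, and the helper `shared_attributes` are hypothetical and chosen for illustration, not taken from an existing implementation.

```python
from dataclasses import dataclass, fields
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Point:
    position: Vec3  # 3D position


@dataclass(frozen=True)
class Line:
    position: Vec3   # a 3D position on the line
    direction: Vec3  # direction of the line


@dataclass(frozen=True)
class Plane:
    position: Vec3  # a 3D position on the plane
    normal: Vec3    # normal direction of the plane


def shared_attributes(primitive) -> Tuple[str, ...]:
    """Return the attribute names a primitive offers for matching in step 1."""
    return tuple(f.name for f in fields(primitive))
```

Because gesture geometry and object geometry would both use the same three types, a palm plane and an object face can be compared attribute by attribute, whatever parameters the object has.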

Why mapping gesture geometry to object geometry is challenging:

- the object doesn't know which geometry is selected for change, especially when the object's geometry is complicated (irregular shapes, concave shapes, internal faces, etc.)

- the object doesn't know what kind of change and how much change the user wants for the object (through the gesture)
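To make the selection problem concrete, here is one naive baseline: pick the object plane whose position and normal are closest to the palm plane. This is an assumption for illustration, not the proposed method. The cost function, the `angle_weight` parameter, and the choice to ignore the sign of the normal are all hypothetical, and the sketch addresses neither the ambiguity for complicated shapes nor the question of what kind of change is intended.

```python
import math


def angle_between(u, v):
    """Unsigned angle in radians between two directions (sign of direction ignored)."""
    dot = abs(sum(a * b for a, b in zip(u, v)))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return math.acos(min(1.0, dot / (norm_u * norm_v)))


def match_cost(gesture_plane, object_plane, angle_weight=1.0):
    """Hypothetical cost: positional distance plus weighted normal misalignment."""
    distance = math.dist(gesture_plane["position"], object_plane["position"])
    angle = angle_between(gesture_plane["normal"], object_plane["normal"])
    return distance + angle_weight * angle


def select_plane(gesture_plane, object_planes, angle_weight=1.0):
    """Return the index of the object plane with the lowest matching cost."""
    return min(
        range(len(object_planes)),
        key=lambda i: match_cost(gesture_plane, object_planes[i], angle_weight),
    )


# Usage: a palm held just above the top face of a box.
faces = [
    {"position": (0.0, 0.0, 1.0), "normal": (0.0, 0.0, 1.0)},  # top face
    {"position": (1.0, 0.0, 0.5), "normal": (1.0, 0.0, 0.0)},  # side face
]
palm = {"position": (0.1, 0.0, 1.2), "normal": (0.0, 0.0, 1.0)}
selected = select_plane(palm, faces)
```

A baseline like this would pick the wrong face when an internal or concave face lies closer to the hand than the intended one, which is exactly the difficulty listed above.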

Why mapping object geometry to object parameter is challenging:

- object geometry is very flexible, while the object parameters are a limited set chosen to ensure fabricability

- some object geometry may not have a corresponding object parameter, so the question is how to update the object parameters to match what the user wants as closely as possible

- how to define this "closeness"

- how fast the mapping or search runs when updating parameters to model the intended geometry change
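One possible reading of "closeness" is the squared distance between the geometry the user asked for and the geometry the parameters can actually produce, minimized within the fabricable parameter ranges. The sketch below assumes this definition and uses a hypothetical box model (`box_face_offsets`) with made-up bounds. The projected gradient descent is a stand-in search, not a claim about which search is fast enough.

```python
def box_face_offsets(params):
    """Hypothetical parametric model: a box centered in x and y, resting on z = 0.
    Maps (width, depth, height) to the offsets of its +x, +y, and top faces."""
    width, depth, height = params
    return (width / 2.0, depth / 2.0, height)


def closeness(params, target, geometry=box_face_offsets):
    """Assumed closeness measure: squared distance between achieved and target geometry."""
    return sum((g - t) ** 2 for g, t in zip(geometry(params), target))


def fit_parameters(start, target, bounds, geometry=box_face_offsets,
                   step=0.1, iterations=500, eps=1e-6):
    """Projected gradient descent with a finite-difference gradient.
    Parameters are clamped to `bounds` after every update to stay fabricable."""
    params = list(start)
    for _ in range(iterations):
        base = closeness(params, target, geometry)
        gradient = []
        for i in range(len(params)):
            bumped = list(params)
            bumped[i] += eps
            gradient.append((closeness(bumped, target, geometry) - base) / eps)
        params = [
            min(high, max(low, p - step * g))
            for p, g, (low, high) in zip(params, gradient, bounds)
        ]
    return params


# Usage: the requested top face (z = 2.0) lies outside the allowed height range.
bounds = [(0.2, 2.0), (0.2, 2.0), (0.3, 1.2)]
fitted = fit_parameters(start=(1.0, 1.0, 1.0), target=(0.5, 0.3, 2.0), bounds=bounds)
```

In this example the width and depth reach the requested faces, while the height stops at its upper bound, the closest fabricable value to the unreachable target.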

Contribution

- (overall: ) bridge the gap between 3D gestures and object parameters, under the constraints that gestures are not explicitly pre-defined and are as intuitive as possible, and that the object modification method is extensible

- (in step 1: ) propose an approach to map gesture geometry to object geometry (in a relatively intuitive way)

- (in step 2: ) propose an approach to map object geometry to object parameter (in a relatively effective way)

Key idea in the method

Objective: adapt DoF

- Hand DoF:

- palm: position + rotation (6 DoF)

- 5 fingers (simplified): point in/out (5 DoF)

- single hand DoF in use: 11 DoF

- two hands total DoF in use: 22 DoF

- 3D gesture geometry DoF:

- palm plane: position + normal direction (6 DoF)

- line: position + direction (6 DoF)

- point: position (3 DoF)

- single hand gesture geometry DoF in use: 15 DoF

- two hand gesture geometry DoF in use: 30 DoF

- 3D object geometry DoF:

- for each mesh: plane (6 DoF) + line (6 DoF) + point (3 DoF)

- total DoF: 15 \(\times\) mesh number

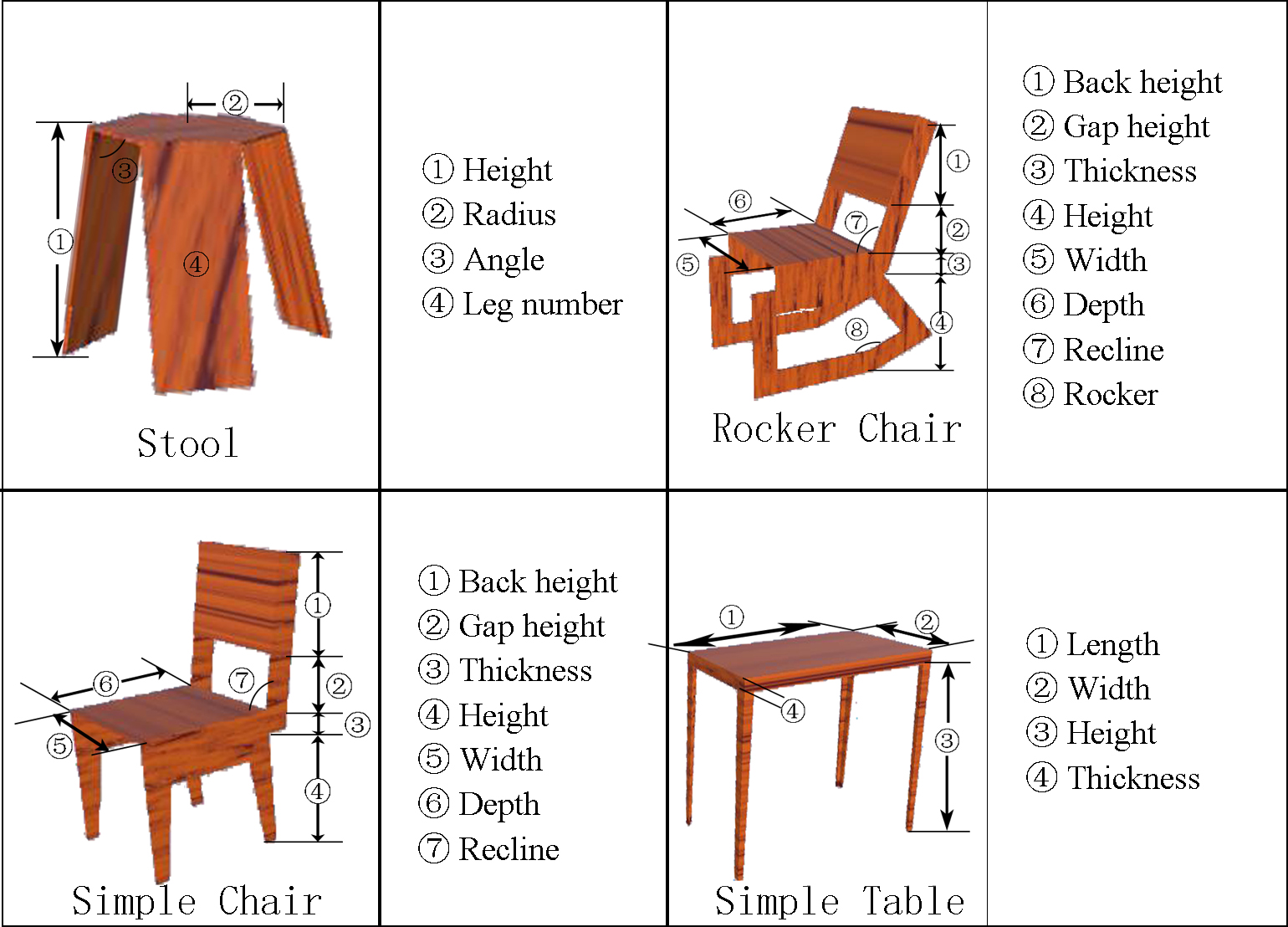

- object parameter DoF:

- limited, different for each object => generally 10-ish DoF for each object

- detailed parameter list example as follows:

so the challenge is to adapt: hand (22 DoF) -> gesture geometry (30 DoF) -> object geometry (15 \(\times\) mesh number DoF) -> object parameter (10-ish DoF)
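The DoF counts along this chain can be tallied with a few lines of arithmetic. The function and dictionary names below are hypothetical, and the mesh count and parameter count in the usage line are example inputs, not measurements from a real object.

```python
# Per-component DoF counts as listed in the notes above.
HAND_DOF = {"palm": 6, "fingers": 5}
PRIMITIVE_DOF = {"plane": 6, "line": 6, "point": 3}


def dof_chain(mesh_count, parameter_count, hands=2):
    """Tally the DoF at each stage: hand -> gesture geometry -> object geometry -> parameter."""
    per_hand = sum(HAND_DOF.values())               # 6 + 5 = 11
    per_primitive_set = sum(PRIMITIVE_DOF.values())  # 6 + 6 + 3 = 15
    return {
        "hand": hands * per_hand,
        "gesture_geometry": hands * per_primitive_set,
        "object_geometry": per_primitive_set * mesh_count,
        "object_parameter": parameter_count,
    }


# Usage: an object with 4 meshes and 10 design parameters (example values).
chain = dof_chain(mesh_count=4, parameter_count=10)
```

With these example inputs the chain first expands from 22 to 30 to 60 DoF and then has to collapse to 10, which shows why the last step cannot be a one-to-one mapping.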