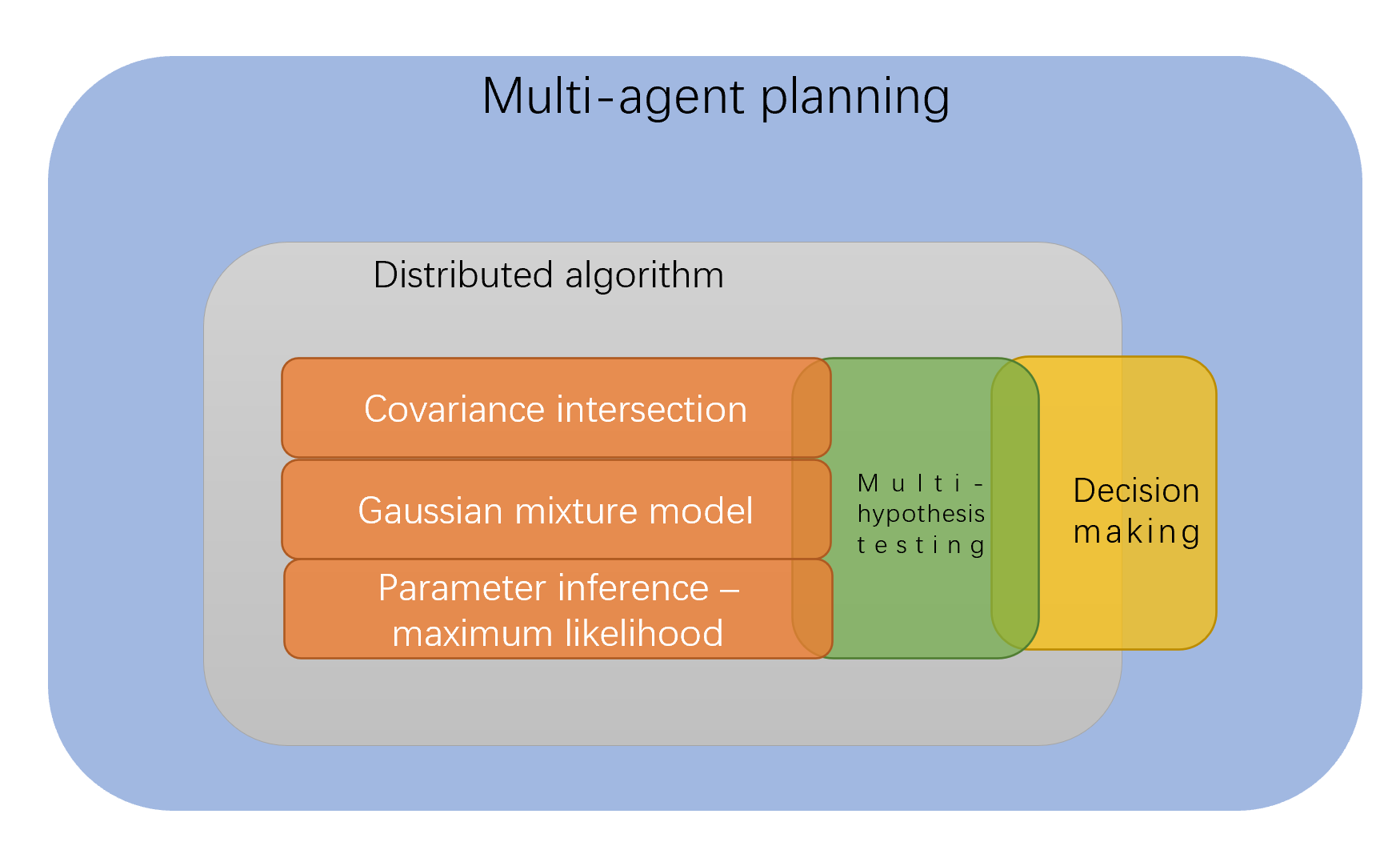

I've been thinking about the next direction to take in my DARPA paper, and I'm putting some ideas together. The overall framework is that I would like to solve a multi-agent planning problem.

Here are some related concepts that tie into this problem:

Multi-agent planning is concerned with planning by (and for) multiple agents. It can involve agents planning for a common goal, an agent coordinating the plans of others (plan merging) or planning on their behalf, or agents refining their own plans while negotiating over tasks or resources. The topic also covers how agents can do this in real time while executing their plans (distributed continual planning). Multi-agent scheduling differs from multi-agent planning in the same way that planning and scheduling differ: in scheduling, the tasks to be performed have often already been decided, and in practice scheduling tends to focus on algorithms for specific problem domains.

An agent is anything that can be viewed as perceiving its environment through sensors and acting upon that environment through actuators.

Distributed Artificial Intelligence (DAI) is an approach to solving complex learning, planning, and decision-making problems. It is embarrassingly parallel and can therefore exploit large-scale computation and spatially distributed computing resources, which lets it solve problems that require processing very large data sets. DAI systems consist of autonomous learning and processing nodes (agents) that are distributed, often at a very large scale. DAI nodes can act independently, and their partial solutions are integrated through communication between nodes, often asynchronously. Because of their scale, DAI systems are robust and elastic and, by necessity, loosely coupled. Furthermore, because their scale makes redeployment difficult, DAI systems are built to adapt to changes in the problem definition or in the underlying data sets.
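As a toy illustration of nodes integrating partial solutions purely through local communication, the sketch below uses randomized pairwise gossip averaging. This is a standard consensus technique chosen here for illustration, not something these notes commit to: each node holds a local estimate, and in every round one random pair of neighbours replaces both values with their mean. The ring topology, the initial values, and the name `gossip_average` are hypothetical.

```python
import random


def gossip_average(values, edges, rounds, seed=0):
    """Randomized pairwise gossip (illustrative sketch).

    Each round, one random edge (i, j) is picked and both endpoints replace
    their values with the pair's mean. The global sum is preserved, so every
    node drifts toward the global average using only pairwise exchanges.
    """
    rng = random.Random(seed)
    x = list(values)
    for _ in range(rounds):
        i, j = rng.choice(edges)
        mean = (x[i] + x[j]) / 2.0
        x[i] = x[j] = mean
    return x


if __name__ == "__main__":
    # Hypothetical network: 6 nodes on a ring, each with its own local estimate.
    n = 6
    ring = [(k, (k + 1) % n) for k in range(n)]
    local = [1.0, 5.0, 3.0, 9.0, 2.0, 4.0]
    print(sum(local) / n)  # true average: 4.0
    print([round(v, 3) for v in gossip_average(local, ring, rounds=500)])
```

No node ever sees the full data set, yet all of them converge to the same answer; asynchronous variants follow the same pattern, with pairs waking up on their own clocks.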

The multiple hypothesis testing problem occurs when a number of individual hypothesis tests are considered simultaneously. In this case, the significance level or error rate of each individual test no longer represents the error rate of the combined set of tests. More generally, the multiple testing problem arises whenever a set of statistical inferences is considered simultaneously, or when a subset of parameters is selected for inference based on the observed values.
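A quick numeric illustration, assuming m independent tests that are each run at level α with every null hypothesis true: the probability of at least one false positive (the family-wise error rate) is 1 − (1 − α)^m, which grows quickly with m. The Bonferroni correction shown below is one standard remedy, named here as an example rather than as the method chosen for this work; the p-values are hypothetical.

```python
def family_wise_error_rate(alpha: float, m: int) -> float:
    """P(at least one false positive) among m independent tests,
    each at significance level alpha, when all null hypotheses are true."""
    return 1.0 - (1.0 - alpha) ** m


def bonferroni_reject(p_values, alpha=0.05):
    """Bonferroni correction: reject H_i only if p_i <= alpha / m.
    This bounds the family-wise error rate by alpha, even without independence."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


if __name__ == "__main__":
    for m in (1, 10, 50):
        print(m, round(family_wise_error_rate(0.05, m), 3))
    # Hypothetical p-values from 5 simultaneous tests.
    print(bonferroni_reject([0.001, 0.02, 0.04, 0.3, 0.009]))  # [True, False, False, False, True]
```

At α = 0.05, ten tests already give roughly a 40% chance of at least one false alarm, and fifty give about 92%. Bonferroni is simple but conservative; less conservative procedures such as Benjamini–Hochberg control the false discovery rate instead.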

Covariance intersection is an algorithm for combining two or more estimates of state variables in a Kalman filter when the correlation between them is unknown.
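A minimal sketch of the covariance intersection update for two estimates (a, P_a) and (b, P_b): the fused covariance is P⁻¹ = ω P_a⁻¹ + (1 − ω) P_b⁻¹ and the fused estimate is x = P (ω P_a⁻¹ a + (1 − ω) P_b⁻¹ b), with ω ∈ [0, 1]. Several simplifying assumptions apply. The state is 2-D so that small pure-Python 2x2 helpers can stand in for a linear-algebra library. Both covariances must be positive definite. ω is chosen by a grid search that minimizes the trace of P, which is one common criterion (minimizing the determinant is another). The example estimates and all function names are hypothetical.

```python
from typing import List, Tuple

Mat2 = List[List[float]]
Vec2 = List[float]


def inv2(m: Mat2) -> Mat2:
    """Inverse of a 2x2 matrix [[a, b], [c, d]]."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def add2(m: Mat2, n: Mat2, wm: float, wn: float) -> Mat2:
    """Weighted sum wm*m + wn*n of two 2x2 matrices."""
    return [[wm * m[i][j] + wn * n[i][j] for j in range(2)] for i in range(2)]


def matvec2(m: Mat2, v: Vec2) -> Vec2:
    """2x2 matrix times 2-vector."""
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]


def covariance_intersection(a: Vec2, Pa: Mat2, b: Vec2, Pb: Mat2,
                            steps: int = 1000) -> Tuple[Vec2, Mat2, float]:
    """Fuse (a, Pa) and (b, Pb) whose cross-correlation is unknown.

    P^-1 = w*Pa^-1 + (1-w)*Pb^-1,  x = P (w*Pa^-1 a + (1-w)*Pb^-1 b),
    with w in [0, 1] picked on a uniform grid to minimize trace(P).
    """
    Ia, Ib = inv2(Pa), inv2(Pb)
    best = None
    for k in range(steps + 1):
        w = k / steps
        P = inv2(add2(Ia, Ib, w, 1.0 - w))
        tr = P[0][0] + P[1][1]
        if best is None or tr < best[0]:
            best = (tr, w, P)
    _, w, P = best
    ya, yb = matvec2(Ia, a), matvec2(Ib, b)  # information vectors Pa^-1 a, Pb^-1 b
    x = matvec2(P, [w * ya[0] + (1 - w) * yb[0], w * ya[1] + (1 - w) * yb[1]])
    return x, P, w


if __name__ == "__main__":
    # Hypothetical estimates: agent A is precise along x, agent B along y.
    x, P, w = covariance_intersection([0.0, 0.0], [[1.0, 0.0], [0.0, 4.0]],
                                      [2.0, 2.0], [[4.0, 0.0], [0.0, 1.0]])
    print(w, x, P)
```

With these inputs the search settles on ω = 0.5, and the fused estimate is approximately (0.4, 1.6) with covariance ≈ 1.6·I. Each coordinate leans toward the agent that is more certain about it. The result stays consistent whatever the true correlation between the two agents' errors is, which is why the method suits agents that cannot tell how much information they already share.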

A Gaussian mixture model is a probabilistic model that assumes all the data points are generated from a mixture of a finite number of Gaussian distributions with unknown parameters.
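To make the "unknown parameters" concrete, here is a minimal expectation-maximization (EM) fit for a one-dimensional mixture in pure Python. Several choices are simplifying assumptions for illustration: the 1-D restriction, quantile-based initialization, a fixed iteration count, and the variance floor. The sketch has no safeguards against empty components or numerical underflow, which a library implementation (for example scikit-learn's `GaussianMixture`) handles. The data and the function names are hypothetical.

```python
import math
import random


def normal_pdf(x: float, mean: float, var: float) -> float:
    """Density of a univariate Gaussian N(mean, var) at x."""
    return math.exp(-((x - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def fit_gmm_1d(data, k=2, iters=100):
    """Fit a k-component 1-D Gaussian mixture by EM.
    Returns (weights, means, variances)."""
    n = len(data)
    srt = sorted(data)
    # Initialise means at evenly spaced quantiles, variances at the pooled variance.
    means = [srt[int((j + 0.5) * n / k)] for j in range(k)]
    mu = sum(data) / n
    variances = [sum((x - mu) ** 2 for x in data) / n] * k
    weights = [1.0 / k] * k
    for _ in range(iters):
        # E-step: responsibility of each component for each point (posterior membership).
        resp = []
        for x in data:
            p = [weights[j] * normal_pdf(x, means[j], variances[j]) for j in range(k)]
            total = sum(p)
            resp.append([pj / total for pj in p])
        # M-step: re-estimate each component from its weighted share of the data.
        for j in range(k):
            nj = sum(r[j] for r in resp)
            weights[j] = nj / n
            means[j] = sum(r[j] * x for r, x in zip(resp, data)) / nj
            var_j = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, data)) / nj
            variances[j] = max(var_j, 1e-6)  # floor keeps a component from collapsing
    return weights, means, variances


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical data: two clusters centred near 0 and 5.
    data = [rng.gauss(0.0, 1.0) for _ in range(200)] + [rng.gauss(5.0, 1.0) for _ in range(200)]
    weights, means, variances = fit_gmm_1d(data)
    print([round(v, 2) for v in weights], [round(v, 2) for v in means],
          [round(v, 2) for v in variances])
```

On data like this the fit recovers weights near 0.5, means near 0 and 5, and variances near 1. The number of components k is an input here; choosing it is a separate model-selection problem.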

Previous problem

The problem we previously wanted to solve:

Extend the approach to distributed multi-agent systems with interaction, assuming that every agent runs the same sensing-planning algorithm (so its behavior can be predicted) but has access only to information from its own sensors.

In an agent network that contains unknown agents, what is the minimum information about the other agents that each agent must know in order to estimate its local error probability in a distributed manner? Given this information, how can the local sensor planner adjust its plan and more accurately estimate how its own error probability affects the network?

New idea:

Can we extend this distributed multi-agent system to the blimp project?