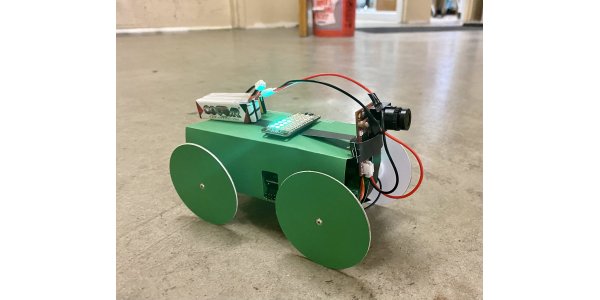

Integrating an OpenMV camera into an origami car to create visual feedback control

Jillian Naldrien Pantig

Tags: origami, robots, rocolib, tank, RoCo, cars, vision processing, visual, openmv, arnhold

During week 8 of SURP 2021, Bhavik Joshi (Team Arnhold), Marisa Duran (Team Arnhold), and I integrated the vision processing epic with the feedback-controlled cars epic. Using Marisa's OpenMV code and my code for controlling the cars, we mounted an OpenMV camera on one of my four-wheeled origami cars (made using RoCo) for feedback control, so that the car moves forward, backward, left, and/or right in response to a color blob in front of it. Bhavik played a major role in merging the vision processing and origami cars epics, as he did most of the organizing and planning for the integration.
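To illustrate the kind of decision the camera-plus-car loop makes, here is a minimal sketch in plain Python (OpenMV itself runs MicroPython). It is not our actual code: the `Blob` type, `drive_command` function, frame width, and all thresholds are hypothetical. It assumes the blob's horizontal centroid decides steering and its apparent size stands in for distance. On an OpenMV board, values like these would come from the blobs returned by `img.find_blobs()`.

```python
from dataclasses import dataclass
from typing import Optional

# All constants below are assumptions for illustration, not our tuned values.
FRAME_WIDTH = 160        # assumed frame width in pixels (e.g. QQVGA)
CENTER_TOLERANCE = 20    # assumed dead band (pixels) around the image center
FAR_AREA = 600           # below this blob area, treat the target as too far
NEAR_AREA = 2000         # above this blob area, treat the target as too close


@dataclass
class Blob:
    """Hypothetical stand-in for a detected color blob."""
    cx: int    # centroid x coordinate, in pixels
    area: int  # blob size, in pixels (proxy for distance)


def drive_command(blob: Optional[Blob]) -> str:
    """Map the detected blob (or None) to a drive command for the car."""
    if blob is None:
        return "stop"  # nothing detected: do not move
    offset = blob.cx - FRAME_WIDTH // 2
    # Steering takes priority: turn until the blob is roughly centered.
    if offset < -CENTER_TOLERANCE:
        return "left"
    if offset > CENTER_TOLERANCE:
        return "right"
    # Blob is centered: use its apparent size to close or open the gap.
    if blob.area < FAR_AREA:
        return "forward"
    if blob.area > NEAR_AREA:
        return "backward"
    return "stop"  # centered and at a comfortable distance


if __name__ == "__main__":
    for b in [None, Blob(30, 1000), Blob(130, 1000), Blob(80, 300), Blob(80, 5000)]:
        print(b, "->", drive_command(b))
```

Giving steering priority over distance keeps the target in view before the car drives toward or away from it; a real controller might blend the two or scale motor speed with the offset.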

As one of the GIFs below shows, we used a red cup as the color blob. This was not our first choice; we originally chose an AprilTag for the camera to detect. However, we realized that an AprilTag would not produce the car motion we want for our integrated demo next Monday, August 16, 2021, in which one car carries a target (an AprilTag or color blob) and a second origami car with a camera follows it.