This post is a bit technical, but here goes.

The last post focused on the write-up side of my project. This time, I will focus on how I attempted to replicate my 1/8th scale autonomous RC car results on my 1/32 scale vehicles.

-Data Collection-

The image above is a raw frame from the Kogeto Dot Lens, which captures a 360-degree panoramic view of its environment. The frame isn't centered, so an OpenCV function crops each image to keep only the information we want. If we think about it, the only state information we need is the donut-shaped ring around the lens.
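To make the cropping step concrete, here is a minimal sketch of pulling the donut out of a frame. It is not the function we actually used: it relies on NumPy only, and `crop_donut`, the lens center, and both radii are hypothetical values you would measure for your own lens.

```python
import numpy as np

def crop_donut(frame, center, r_inner, r_outer):
    """Keep only the ring between r_inner and r_outer around center.

    frame  : H x W (grayscale) or H x W x C image array
    center : (cx, cy) pixel position of the lens center (assumed known)
    """
    cx, cy = center
    h, w = frame.shape[:2]

    # Squared distance of every pixel from the lens center.
    ys, xs = np.ogrid[:h, :w]
    dist_sq = (xs - cx) ** 2 + (ys - cy) ** 2
    ring = (dist_sq >= r_inner ** 2) & (dist_sq <= r_outer ** 2)

    # Black out everything that is not part of the donut.
    masked = np.zeros_like(frame)
    masked[ring] = frame[ring]

    # Crop to the bounding square of the outer circle, clipped to the frame.
    x0, x1 = max(cx - r_outer, 0), min(cx + r_outer + 1, w)
    y0, y1 = max(cy - r_outer, 0), min(cy + r_outer + 1, h)
    return masked[y0:y1, x0:x1]

# Example with a dummy all-white frame and made-up lens geometry.
frame = np.full((480, 640, 3), 255, dtype=np.uint8)
donut = crop_donut(frame, center=(350, 230), r_inner=60, r_outer=200)
```

The cropped result is square and centered on the lens, so it can be resized straight to the network's input size.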

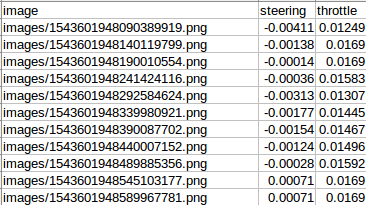

Data is collected from the Robot Operating System (ROS) and the OptiTrack motion capture system, then timestamped and sorted as shown below. We collected around 15 minutes of training data. Every time a crash happened, the throttle was maxed to free-spin the rear wheels, and that throttle spike served as a marker for cropping the crash out of the data after the experiment. The data parsing was done by my project teammate Kevin, who will probably write about it on his own blog. It was based on the parser from the 1/8th scale project.
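The crash-marker idea can be sketched in a few lines. This is not Kevin's parser, just an illustration under some assumptions: samples are `(timestamp, steering, throttle)` tuples, throttle is normalised so that `1.0` means maxed, normal driving never reaches full throttle, and `drop_before` is a hypothetical margin for throwing away the moments leading up to each crash.

```python
MAX_THROTTLE = 1.0  # assumed normalised full-throttle value

def split_at_crashes(samples, max_throttle=MAX_THROTTLE, drop_before=1.0):
    """Split timestamped samples into crash-free runs.

    samples     : list of (timestamp_s, steering, throttle) tuples
    drop_before : seconds of driving to discard before each crash marker
                  (a hypothetical margin, not a value from our experiment)
    """
    samples = sorted(samples, key=lambda s: s[0])
    runs, current = [], []
    for sample in samples:
        t, _, throttle = sample
        if throttle >= max_throttle:
            # Crash marker: close the current run and trim the lead-up.
            current = [s for s in current if s[0] < t - drop_before]
            if current:
                runs.append(current)
            current = []
        else:
            current.append(sample)
    if current:
        runs.append(current)
    return runs

# Example: a crash is marked at t = 2.0 s and 2.5 s.
log = [(0.0, 0.1, 0.3), (0.5, 0.1, 0.3), (1.0, 0.2, 0.3), (1.5, 0.4, 0.3),
       (2.0, 0.0, 1.0), (2.5, 0.0, 1.0), (3.0, 0.0, 0.3), (3.5, 0.1, 0.3)]
runs = split_at_crashes(log)
```

Here `runs` holds two clean segments: the driving before the crash, minus the last second, and the driving after the car was reset.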

-Keras in Tensorflow-

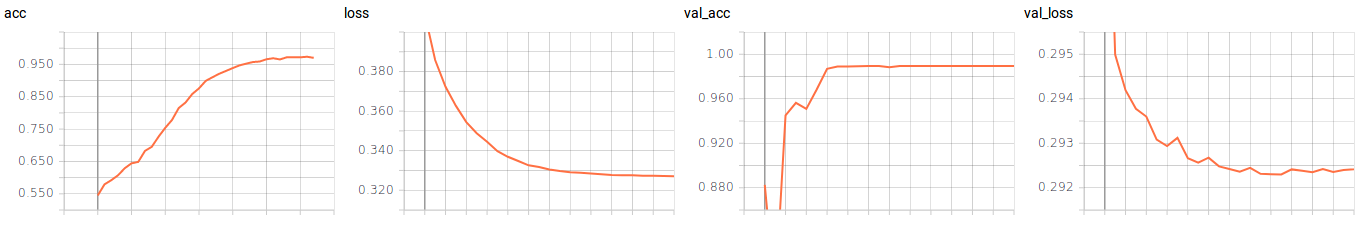

The model was built in Keras, a high-level neural network API that runs on top of TensorFlow. The images you see below show the training set and validation set accuracy and loss in TensorBoard. I picked up a few key insights while constructing the 3D CNN model for the car, which I list below. We ended up with an accuracy of 99% on the test set and 98% on the training set, so the results were pretty good.

-First Insight: Two 3D convolutional layers are enough to find a relationship between the vehicle images and the steering/throttle values. This was interesting because I had previously used five 3D conv layers on my 1/8th scale vehicles.

-Second Insight: 160x160 images produce more accurate inferences than 200x200 images using the same model. This is probably because 200x200 images need a deeper network, while 160x160 images already capture the state of the vehicle well enough.
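One rough way to see why the larger input might want a deeper network is to count how many features a shallow model leaves for the dense layers. The sketch below is plain arithmetic, not our actual architecture: the 5-frame stack, the 3x3x3 kernels, the 2x2 spatial pooling, and the filter counts are all hypothetical.

```python
def conv_out(size, kernel, stride=1):
    """Output length of an unpadded ('valid') convolution or pooling."""
    return (size - kernel) // stride + 1

def features_after_two_blocks(side, frames=5, filters=(16, 32),
                              kernel=3, pool=2):
    """Flattened feature count after two 3D conv + spatial max-pool blocks."""
    depth, height, width = frames, side, side
    for _ in filters:
        # 3D convolution with a kernel x kernel x kernel window, stride 1.
        depth = conv_out(depth, kernel)
        height = conv_out(height, kernel)
        width = conv_out(width, kernel)
        # Max pooling over the spatial dimensions only.
        height = conv_out(height, pool, stride=pool)
        width = conv_out(width, pool, stride=pool)
    # The last entry in filters is the channel count of the final block.
    return depth * height * width * filters[-1]

small = features_after_two_blocks(160)  # 46208 features
large = features_after_two_blocks(200)  # 73728 features
```

With these made-up settings, the 200x200 input leaves roughly 1.6 times as many features after the same two layers, which fits the hunch that it would need more layers (or more pooling) to be summarised as compactly.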

My next post will focus on deploying this model and optimizing it to ensure that the vehicle can run fast and robustly. Stay tuned!