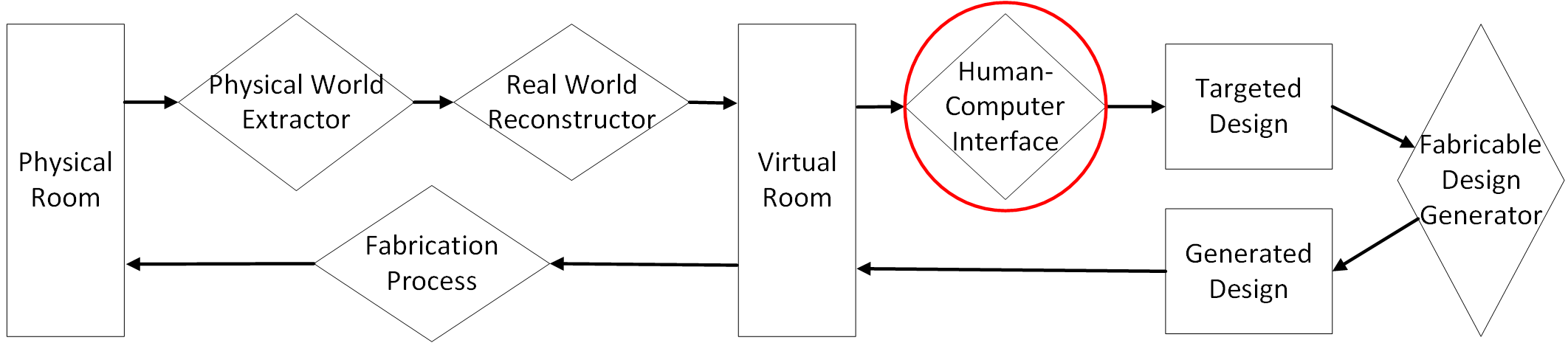

Background

Sub-system: human-computer interface

Overall objective of the whole system: a novice user can use intuitive 3D hand gestures to express 3D design ideas and produce fabrication- and environment-aware designs.

Sub-objective of the sub-system: to address the keywords "novice" and "intuitive" in the overall objective.

General sub-question to answer using this sub-system: how to reflect design intent in object geometry using 3D free-hand gestures

Specific sub-question derived from the general sub-question: how to use natural/intuitive 3D gestures to manipulate/edit a virtual 3D object

Break down the problem

When a designer uses a free-hand gesture to manipulate/edit an object, design information flows as follows:

Design intent \(\rightarrow\) Hand gesture \(\rightarrow\) Object geometry

- The designer uses hand gestures to express their design intent

- The system should be able to estimate this design intent from the gesture, and use the estimated intent to modify the object geometry

Challenge/Contribution

Which gestures are natural/intuitive for expressing design intent:

The challenge is that intuition varies from user to user, so there is no clear way to define or evaluate how natural/intuitive a gesture is.

Here are some steps to approach the challenge and transform it into a contribution:

- A (pseudo-)user study to investigate how people interact with a virtual/real 3D object

- An observation step that extracts the most common intuitions about using gestures to express design intent

- An implementation of gesture manipulation commands based on the observed design intuitions

- A proof-of-concept user study to test the "intuitiveness" of the implemented gesture commands

Which kinds of manipulation are allowed:

The most common manipulations are selection, translation, rotation, and scaling.

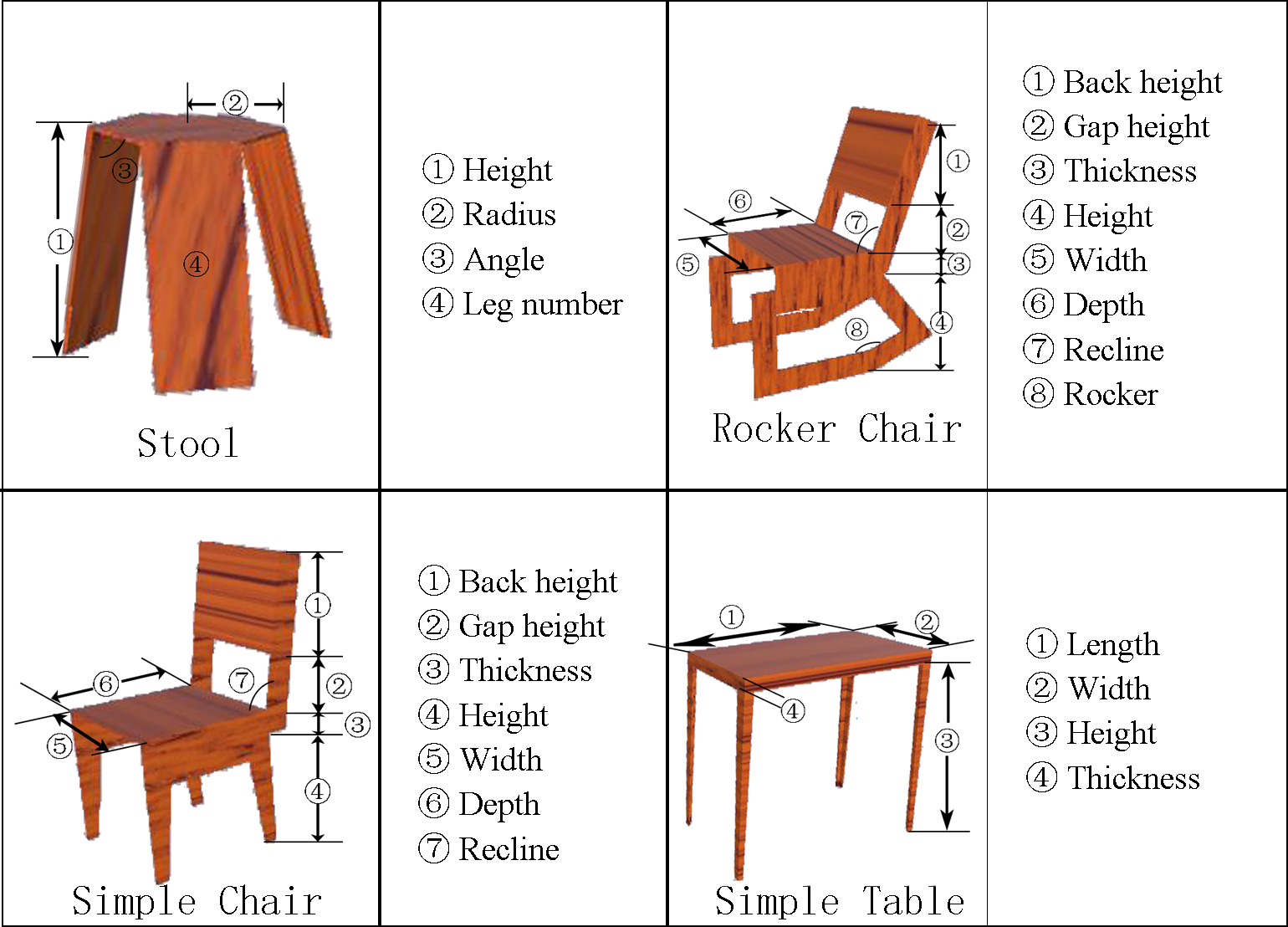

The following manipulations build on our backend compiler, which ensures the design object is fabricable while allowing a certain level of design flexibility. They are unique to our system and can be considered our contribution:

- Partial scaling

- Angle between components

- Change of discrete property

Partial scaling

e.g. For a rocker chair, four parameters are related to height: 1) back height, 2) gap height, 3) thickness, 4) height. Our gesture manipulation should therefore be able to change any one of them individually, instead of only scaling the overall height.
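The difference between partial and whole-object scaling can be sketched in a few lines of Python. This is only an illustration: the `HeightParams` class and the `partial_scale` function are hypothetical names, the numeric values are made up, and the fabricability check performed by the backend compiler is not modeled here.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class HeightParams:
    """Hypothetical container for the four height-related chair parameters."""
    back_height: float
    gap_height: float
    thickness: float
    height: float


def partial_scale(params, name, factor):
    """Scale a single named parameter and leave the others unchanged."""
    valid_names = {f.name for f in fields(params)}
    if name not in valid_names:
        raise KeyError(f"unknown parameter: {name}")
    if factor <= 0:
        raise ValueError("factor must be positive")
    return replace(params, **{name: getattr(params, name) * factor})


# Made-up example values, for illustration only.
chair = HeightParams(back_height=40.0, gap_height=10.0, thickness=2.0, height=45.0)
taller_back = partial_scale(chair, "back_height", 1.5)
```

In this sketch a gesture recognizer would only need to supply two things: which parameter the user is targeting and by how much. How to infer the targeted parameter from a gesture is exactly what the user study is meant to inform.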

Angle between components

e.g. For a rocker chair, the user's design intent might be environment-related: for example, when the chair rocks, the back should not hit the wall. The rocking angle of the chair is not controlled by a single parameter, however, but by two angle parameters together: 7) recline, 8) rocker.

So our system should be able to recognize the user's design intent, modify both parameters, and find the combination that best satisfies this dynamic design intent.

This kind of dynamic estimation and environment-aware design process is rare in current design tools.
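One way to picture "find the best combination" is as a small constrained search over the two angles. The sketch below is a toy under loud assumptions: the geometric model in `back_reach` (reach grows with the sine of the summed angles) is invented for illustration and is not the chair's real kinematics, and `fit_angles`, the angle ranges, and the "stay closest to the current design" criterion are all hypothetical choices.

```python
import math
from itertools import product


def back_reach(recline_deg, rocker_deg, back_length):
    """Toy model (assumption): how far the top of the back extends
    toward the wall when the chair is rocked fully backward."""
    return back_length * math.sin(math.radians(recline_deg + rocker_deg))


def fit_angles(current, wall_clearance, back_length,
               recline_range=(0, 40), rocker_range=(0, 30)):
    """Return the (recline, rocker) pair, in whole degrees, that keeps the
    back clear of the wall while changing the current angles the least.
    Returns None if no pair in the ranges satisfies the constraint."""
    best, best_cost = None, math.inf
    reclines = range(recline_range[0], recline_range[1] + 1)
    rockers = range(rocker_range[0], rocker_range[1] + 1)
    for recline, rocker in product(reclines, rockers):
        if back_reach(recline, rocker, back_length) > wall_clearance:
            continue  # the back would hit the wall
        cost = (recline - current[0]) ** 2 + (rocker - current[1]) ** 2
        if cost < best_cost:
            best, best_cost = (recline, rocker), cost
    return best


# Made-up numbers: a 100-unit back with 51 units of clearance to the wall.
adjusted = fit_angles(current=(30, 20), wall_clearance=51, back_length=100)
```

The point of the sketch is the shape of the problem rather than the solver: a single environment-related intent becomes a constraint over two coupled parameters, and the system chooses among the feasible pairs. A real implementation would replace the toy model with the geometry produced by the backend compiler.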

Change of discrete property

e.g. For a stool, the number of legs can be changed to any integer from 2 upward, with no fixed upper limit. This manipulation is rare in existing design tools.
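Because a gesture is continuous and the leg count is an integer, some quantization step is needed between the two. The following is a minimal sketch, assuming a drag distance is the input; `leg_count_from_drag`, `leg_angles`, the `step_size` value, and the even spacing of legs around the seat are illustrative assumptions rather than the system's actual behavior.

```python
def leg_count_from_drag(start_count, drag_distance, step_size=0.05, min_legs=2):
    """Map a continuous drag distance to an integer leg count.

    Every full `step_size` of drag adds one leg (or removes one for a
    negative drag). The result never drops below `min_legs` and has no
    upper bound.
    """
    steps = int(drag_distance / step_size)  # truncates toward zero
    return max(min_legs, start_count + steps)


def leg_angles(count):
    """Place `count` legs evenly around the seat, in degrees (assumption)."""
    return [360.0 * i / count for i in range(count)]


more_legs = leg_count_from_drag(start_count=4, drag_distance=0.12)
fewest_legs = leg_count_from_drag(start_count=4, drag_distance=-0.5)
layout = leg_angles(4)
```

Which gesture should drive this count (a drag, a pinch, a number of extended fingers) is left open here, since that is one of the questions for the user study.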

Next steps

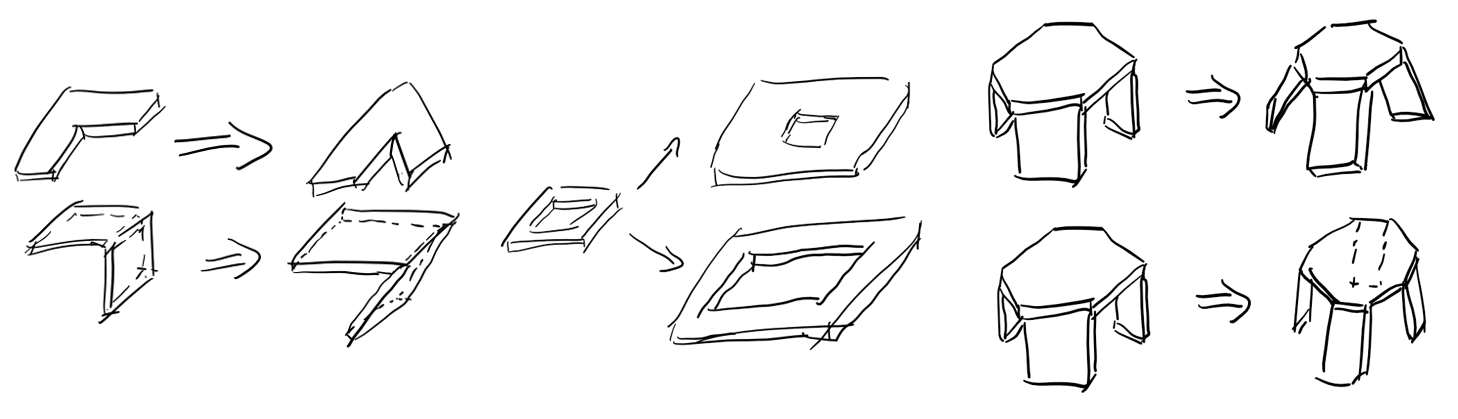

- Come up with a list of questions/demos/videos for the (pseudo-)user study, to investigate design intuition for these three types of manipulation.

For example, here are some rough thoughts on manipulations that are simpler than those on a complicated furniture object but might still demonstrate our three manipulation types:

- Observe users' design intuition when they are asked to use gestures to perform these modifications

- Implement gesture commands based on the observation