20 Jan

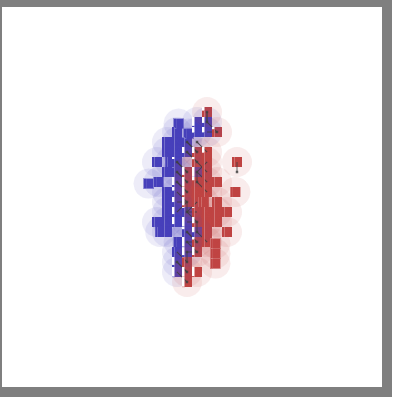

Test result: Mean-field Multi-agent Reinforcement Learning Platform

Author: Zida Wu

Tags: reinforcement learning; multi-agent; mean-field

Paper target: IROS 2022

Current: the existing open-source mean-field reinforcement learning code, which is built on MAgent.

Blue: Group 1 (Proposed Algorithm)

Red: Group 2 (Opponent)

| Algorithm | Information | Collision | Task |
|---|---|---|---|
| Current | Uses all agents as the neighborhood | At most one agent per state (position) | Homogeneous |
| Proposed (ours) | Uses only the closest neighbors | Overlapping allowed | Heterogeneous |
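The Information column can be illustrated with a minimal sketch, assuming discrete actions encoded as one-hot vectors and agents located by 2-D coordinates. The function `mean_action` and its parameter `k` are hypothetical illustrations, not part of MAgent or the mean-field RL code. With `k=None` the mean action averages over every other agent in the group; with an integer `k` it averages only over the `k` nearest agents by Euclidean distance.

```python
import numpy as np


def mean_action(actions, positions, agent, n_actions, k=None):
    """Mean action of `agent`'s neighbors as an averaged one-hot vector.

    Hypothetical helper for illustration only.
    k=None -> all other agents in the group are neighbors (current setting).
    k=int  -> only the k closest agents by Euclidean distance (proposed).
    """
    onehot = np.eye(n_actions)[actions]  # shape (N, n_actions)
    # Assumption: the agent itself is excluded from its own neighborhood.
    others = np.array([j for j in range(len(actions)) if j != agent], dtype=int)
    if k is not None:
        dists = np.linalg.norm(positions[others] - positions[agent], axis=1)
        # Stable sort keeps index order among equally distant agents.
        others = others[np.argsort(dists, kind="stable")[:k]]
    return onehot[others].mean(axis=0)
```

Whether the agent itself belongs to its own neighborhood, and whether "closest" means a fixed `k` or a distance radius, are open design choices in this sketch; a radius-based neighborhood would replace the `argsort` cut with a distance threshold.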

Next Steps

- Step 1: Address the information problem: use only the closest neighbors' information.

- Step 2: Address the collision problem: allow overlapping (multiple agents in one position).

- Step 3: Address the task-type problem: add heterogeneous attributes.
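The collision change in Step 2 can be made concrete with a toy grid update, assuming agents move one at a time in index order. `step_positions` and `allow_overlap` are hypothetical names; MAgent's own movement and conflict-resolution rules are not reproduced here.

```python
from collections import defaultdict


def step_positions(positions, moves, allow_overlap):
    """Apply one (dx, dy) move per agent on a grid, in agent index order.

    Hypothetical helper for illustration only.
    allow_overlap=False: a move into an occupied cell is rejected and the
        agent stays put (at most one agent per position).
    allow_overlap=True: any number of agents may share a cell.
    """
    occupied = defaultdict(int)  # cell -> number of agents currently in it
    for p in positions:
        occupied[p] += 1
    new_positions = []
    for (x, y), (dx, dy) in zip(positions, moves):
        target = (x + dx, y + dy)
        if not allow_overlap and occupied[target] > 0:
            new_positions.append((x, y))  # blocked: stay in place
            continue
        occupied[(x, y)] -= 1
        occupied[target] += 1
        new_positions.append(target)
    return new_positions
```

With sequential resolution, the outcome depends on agent order; a simultaneous-move rule would need a separate conflict-resolution step.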

Terminology:

- Round: one training batch of the multi-agent neural networks. All algorithms are trained for 1599 rounds.

- MF-AC: Mean-Field Actor-Critic algorithm

- MF-Q: Mean-Field Q-learning algorithm

- IL: Individual Q-learning algorithm

- AC: Individual Actor-Critic algorithm
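For reference, MF-Q as formulated in the original paper replaces the joint action of the other agents with their mean action `a_bar`. The policy is a Boltzmann distribution `pi(a | s, a_bar) ∝ exp(beta * Q(s, a, a_bar))`, the next-state value is `v(s') = sum_a pi(a | s', a_bar) * Q(s', a, a_bar)`, and the update is `Q <- (1 - alpha) * Q + alpha * (r + gamma * v(s'))`. The tabular sketch below follows that formulation, assuming the expectation over `a_bar` is approximated by the current mean-action estimate. The function names are hypothetical, and the platform's neural-network implementation may compute its target differently.

```python
import numpy as np


def boltzmann_policy(q_values, beta):
    """pi(a | s, a_bar) proportional to exp(beta * Q(s, a, a_bar))."""
    z = beta * (q_values - q_values.max())  # shift by max for numerical stability
    p = np.exp(z)
    return p / p.sum()


def mfq_target(reward, q_next, beta, gamma, done=False):
    """Target r + gamma * v(s'), where v(s') = sum_a pi(a|s', a_bar) Q(s', a, a_bar).

    q_next holds Q(s', a, a_bar) for every action a, evaluated at the current
    mean-action estimate a_bar (assumption: stands in for the expectation).
    """
    if done:
        return reward  # no bootstrapping from a terminal state
    v_next = boltzmann_policy(q_next, beta) @ q_next
    return reward + gamma * v_next


def mfq_update(q_sa, target, alpha):
    """Tabular MF-Q step: Q <- (1 - alpha) * Q + alpha * target."""
    return (1 - alpha) * q_sa + alpha * target
```

As `beta` grows, the Boltzmann value approaches `max_a Q(s', a, a_bar)`, so `beta` trades off between a greedy target and a more exploratory, averaged one.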

All experimental results are consistent with those reported in the original paper, Mean Field Multi-Agent Reinforcement Learning (Yang et al., 2018), where the expected performance ranking is MFQ > IL > MFAC > AC. An explanation of this ranking can be found in the original paper.

MFAC group vs. AC group

MFAC group vs. IL group

MFAC group vs. MFQ group

MFQ group vs. IL group