A new approach to capturing a balloon in 3D space with a blimp

Shahrul Kamil bin Hassan (Kamil)

After the post-mortem from the previous competition, we started by identifying the small problems that we had to fix before integrating the whole system. There were multiple issues to handle, on both the hardware and the software side.

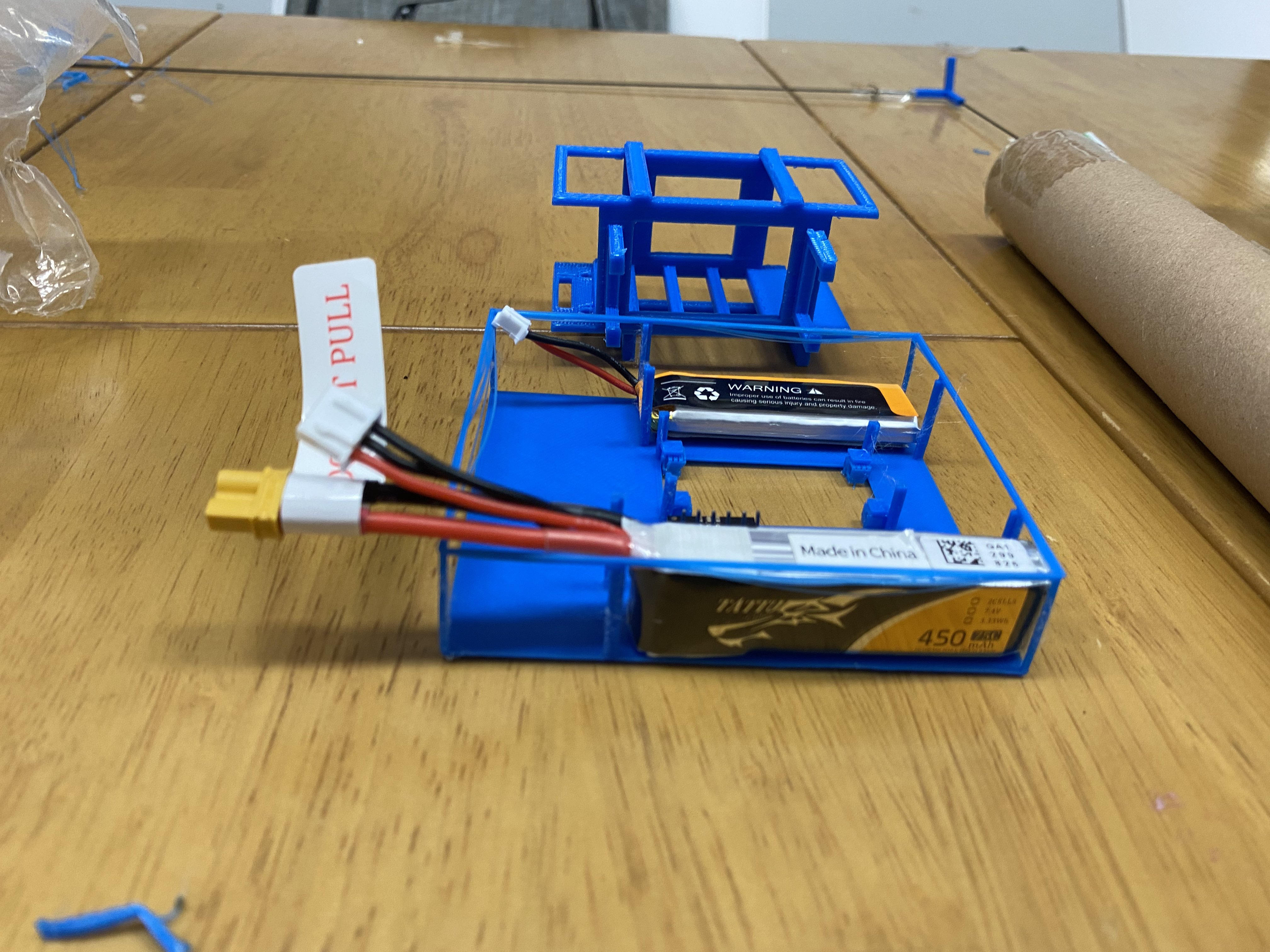

First, the main chassis that holds the microcontroller and other electrical components needs to be designed so that all the components fit perfectly without taping or gluing them to the chassis. This wasn't the case for the previous iteration. Because of that, and because we had a small team for this project, we had to spend a lot of time setting up a single blimp, which prevented us from achieving our goal of having multiple autonomous blimps in the air. The MAE members are actively working on 2 different chassis designs that would hold the components without additional taping.

Second, with our setup from the previous competition, we realized that the DC motors we are currently using do not give the blimp the minimal mobility it needs to navigate around the field. There are 2 possible approaches to solving this problem: design the blimp to weigh less so that the current motors can move it faster, or use brushless motors, which will give the blimp more thrust. We decided to do the latter and are currently waiting for the materials to arrive so that we can replace the previous motors.

Third, the current architecture, in which the blimp sends images back to a laptop that then processes them, is not our best solution. 2 out of 3 teams that qualified during the November competition used onboard visual processing to capture the green balloon, so this will be our approach as well. Initial color processing has been implemented: the ESP-EYE takes live frames from the camera, assigns RGB values to them, and prints out the results. The end goal here is for the camera to be able to do 4 things:

1. Identify the green balloon and provide the (x, y, size) information

2. Identify the AprilTags and provide the 6-DOF pose information

3. Identify the goal (shape or color)

4. Send images for manual streaming

Finally, we will be changing our approach to the green balloon catching algorithm. Instead of moving forward and trying to capture the balloon, we want to place the basket below the blimp and catch the green balloon by descending onto it. Preliminary code has been written, and we are now waiting for the visual detection results.
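To make the first camera goal and the descend-to-catch idea more concrete, here is a minimal sketch in Python. It is not the code running on the ESP-EYE, and everything in it is an assumption for illustration: the frame is a plain list of rows of (R, G, B) tuples, the "green" rule is a simple channel-margin threshold, the camera is assumed to look down toward the basket, and the names `is_green`, `find_green_blob`, and `catch_command` are hypothetical.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]      # (R, G, B), each 0-255
Blob = Tuple[float, float, int]   # (x, y, size) in pixels


def is_green(pixel: Pixel, margin: int = 40) -> bool:
    """Assumed rule: the green channel exceeds red and blue by `margin`."""
    r, g, b = pixel
    return g > r + margin and g > b + margin


def find_green_blob(frame: List[List[Pixel]]) -> Optional[Blob]:
    """Return the centroid and pixel count of all green pixels, or None."""
    count = sum_x = sum_y = 0
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            if is_green(pixel):
                count += 1
                sum_x += x
                sum_y += y
    if count == 0:
        return None
    return (sum_x / count, sum_y / count, count)


def catch_command(blob: Optional[Blob], width: int, height: int,
                  tolerance: float = 0.1) -> Tuple[str, float, float]:
    """Pick a hypothetical high-level command for descend-to-catch.

    Returns (command, dx, dy), where dx and dy are the balloon's offset
    from the image center as a fraction of the frame size. Assumes a
    downward-facing camera, so a centered balloon sits under the basket.
    """
    if blob is None:
        return ("search", 0.0, 0.0)
    x, y, _size = blob
    dx = (x - (width - 1) / 2) / width
    dy = (y - (height - 1) / 2) / height
    if abs(dx) <= tolerance and abs(dy) <= tolerance:
        return ("descend", dx, dy)
    return ("align", dx, dy)


if __name__ == "__main__":
    # Synthetic 8x8 frame: gray background with a 2x2 green patch in the middle.
    gray, green = (90, 90, 90), (20, 200, 30)
    frame = [[gray] * 8 for _ in range(8)]
    for yy in (3, 4):
        for xx in (3, 4):
            frame[yy][xx] = green
    blob = find_green_blob(frame)
    print(blob, catch_command(blob, 8, 8))
```

A single centroid over all green pixels is the simplest choice and will be thrown off by other green objects in view; a real detector would likely need connected-component filtering and a threshold tuned to the field lighting.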