Decentralized Multi-agent Reinforcement Learning System

This project is working for an decentralized multi-agent system based on reinforcement learning.

Project Member: Zida Wu

Current SOTA decentralized multi-agent reinforcement learning (Dec-MARL) algorithms mostly assume that, at least at training stage, agents are able to obtain global information (states or actions). This strong assumptions is inapplicable for real demands, such as large-scale multi-agent system, ad-hoc teaming, or agents deployed asynchronously.

In this project, we intend to propose a fully decentralized algorithm based on mean-field theory, in which agents' policies only depend on the local information of each agent both in training or execution stage. Moreover, rather than the homogeneity, our algorithm can handle with heterogeneous tasks, which means agents in the same field can have different intentions or be assigned into heterogeneous tasks.

In a brief,

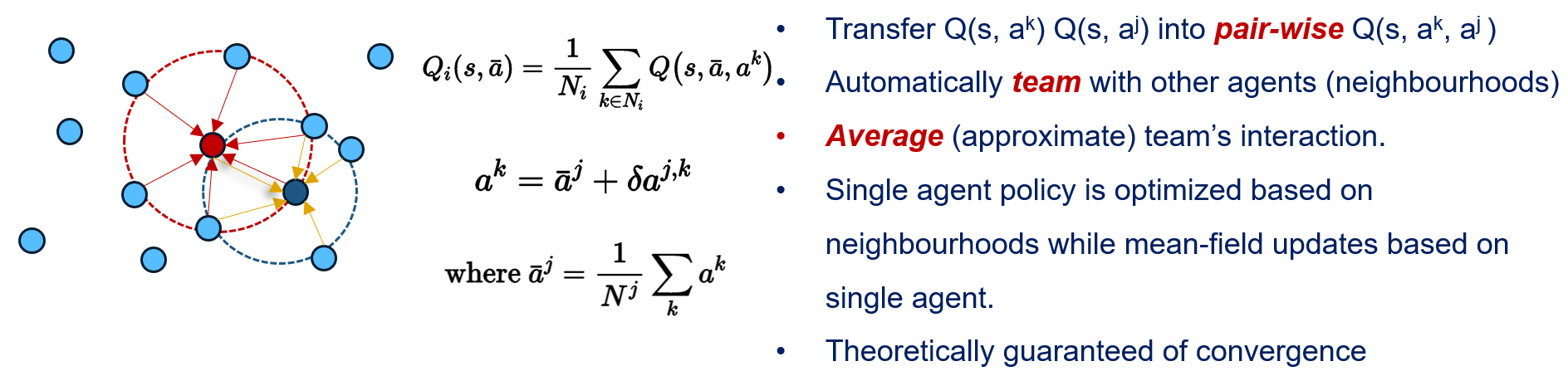

- we transfered Q-network/Policy-network based on individual state & actions into pairwise Q-network/Policy-network

- Using mean-field idea to approximate all neighbors behaviors

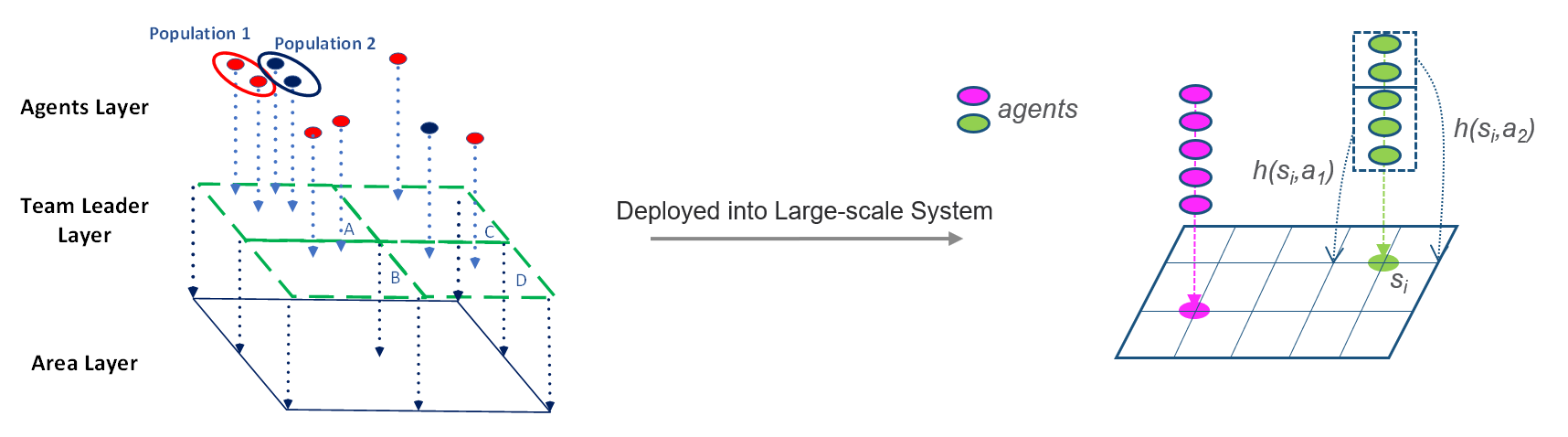

- Using state-based mean-field algorithm to coordinate large-scale agents instead of agent-based

- Allow heterogeous agents (divided into multiple populations) in this sytem.

The agent-based mean-field idea is as follows:

State-based mean-field idea is this:

More details please search key-words "mean-field" in this blog post page