SLAM for All Autonomous Agents

Building SLAM modules with performance guarantees

Autonomy is the ultimate goal in robotics. As a prerequisite, an autonomous agent should be able to localize itself with respect to its environment while concurrently constructing a map of that environment. This ability, known in robotics as simultaneous localization and mapping (SLAM), was widely believed to have been solved in the last decade. However, with the progress in deep learning and neuroscience, we would like to bring new insights to this dormant problem in order to pave the way toward a fully autonomous system.

In traditional robotics, with the introduction of probabilistic methods, SLAM is modeled as an estimation problem. That is, SLAM is achieved by estimating the agent’s position as well as the landmarks’ positions from odometry and observation information. Within this estimation-based line of work, the first established SLAM algorithm is based on the extended Kalman filter (EKF). FastSLAM was proposed later and became mainstream by relaxing the linearity and Gaussian-noise assumptions of the EKF method. Instead of being cast as an estimation problem, SLAM can also be tackled with optimization-based methods. Graph SLAM, for example, treats odometry and observation information as constraints, and the agent trajectory is the optimized result that best fits those constraints. Beyond robotics, SLAM is also addressed by deep learning as an optimization problem: often formulated within reinforcement learning, SLAM is solved implicitly while the agent performs navigational tasks.
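To make the optimization view concrete, the following is a minimal sketch of the Graph SLAM idea on a toy problem, not a production implementation. It assumes a robot moving along a line with a single landmark, so that every constraint is linear and the optimum is an ordinary weighted least-squares solution. The function name `solve_pose_graph_1d`, its parameters, and the noise levels are hypothetical choices made for illustration.

```python
import numpy as np


def solve_pose_graph_1d(odometry, landmark_obs, odom_sigma=0.1, obs_sigma=0.05):
    """Weighted least-squares estimate of 1D poses x_0..x_T and one landmark m.

    odometry[t]     : measured displacement x_{t+1} - x_t
    landmark_obs[t] : measured offset m - x_t, or None if the landmark was not seen
    The sigmas are assumed measurement standard deviations (illustrative values).
    """
    n_poses = len(odometry) + 1
    n_vars = n_poses + 1  # the last unknown is the landmark position
    rows, rhs = [], []

    # Strong prior x_0 = 0: fixes the global translation, which is otherwise unobservable.
    row = np.zeros(n_vars)
    row[0] = 1.0
    rows.append(row / 1e-3)
    rhs.append(0.0)

    # Odometry constraints: x_{t+1} - x_t = u_t
    for t, u in enumerate(odometry):
        row = np.zeros(n_vars)
        row[t], row[t + 1] = -1.0, 1.0
        rows.append(row / odom_sigma)
        rhs.append(u / odom_sigma)

    # Observation constraints: m - x_t = z_t
    for t, z in enumerate(landmark_obs):
        if z is None:
            continue
        row = np.zeros(n_vars)
        row[t], row[-1] = -1.0, 1.0
        rows.append(row / obs_sigma)
        rhs.append(z / obs_sigma)

    # The trajectory and map are whatever best fits all constraints at once.
    A = np.vstack(rows)
    b = np.array(rhs)
    solution, *_ = np.linalg.lstsq(A, b, rcond=None)
    return solution[:n_poses], solution[-1]


# Noisy odometry and landmark offsets for a robot stepping roughly 1 unit at a time.
poses, landmark = solve_pose_graph_1d(
    odometry=[1.1, 0.9, 1.05],
    landmark_obs=[5.0, 3.95, None, 2.02],
)
print("estimated poses:", poses)
print("estimated landmark:", landmark)
```

In realistic settings the constraints are nonlinear (for example, range and bearing in 2D), so the same structure is typically solved iteratively by relinearizing around the current estimate rather than in a single linear solve.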

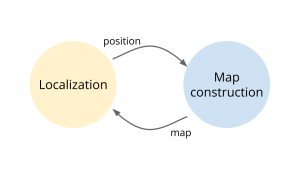

Estimation-based and optimization-based methods each have their own advantages. The former produce output instantaneously and provide the map explicitly. In contrast, optimization-based methods take physical constraints into account and can be easily integrated with higher-level navigational tasks. The two approaches are merged in EM SLAM, where localization and map construction are solved as two interdependent subproblems, corresponding to estimation and optimization, respectively.

Complementary to robotics, which tries to build an autonomous system from scratch, neuroscience studies existing autonomous agents to investigate the computational principles underlying their behavior. Several types of neurons with spatial selectivity have been found in mammalian brains, most notably place cells in the hippocampus and grid cells in the entorhinal cortex. The spatial representation encoded in those neurons is insightful for engineering design, and the overall architecture that facilitates SLAM can also be applied in robotics. Recently, some artificial neurons in deep networks have been found to behave similarly to grid cells in navigational tasks, which further suggests unifying SLAM principles shared by artificial and biological agents.

With a fully autonomous agent as the ultimate goal, we think it is vital to build a SLAM module that comes with performance guarantees and an interface for integration with higher-level tasks, such as navigation. The following are the important problems:

- Localization: nonlinear filter, filtering on Lie group, spatial representation

- Map construction: online EM SLAM, theoretical characterization, the interface between SLAM and navigation

- Navigation: uncertainty and cost characterization